
![Australia's First Online Journal Covering Air Power Issues [ISSN 1832-2433]](APA/APA-Title-Analyses.png)


Last Updated: Mon Jan 27 11:18:09 UTC 2014


Cutting Through the Tangled Web: An Information-Theoretic Perspective on Information Warfare

Air Power Australia Analysis 2012-02

20th October 2012


A Monograph by Lachlan N. Brumley, BSE(Hons), Dr Carlo Kopp, AFAIAA, SMIEEE, PEng, Dr Kevin B. Korb, SMIEEE, MAAAI

Text, computer graphics © 2012 Lachlan Brumley, Carlo Kopp, Kevin Korb


Information Warfare in social systems has a long and colourful history dating back to antiquity. Despite the plethora of well-documented historical instances, and well-known instances in the biological domain, mathematical formalisms based on Information Theory are a very recent development, produced over the last two decades. Depicted is an RC-135VW Rivet Joint electronic and signals intelligence aircraft of the 763rd Expeditionary Reconnaissance Squadron in South-West Asia, 2009, a critical asset in both theatre and strategic Information Operations (U.S. Air Force image).




The Information Warfare Problem


It is widely accepted that the term “Information Warfare” was first used by Thomas Rona in 1976 [3] when discussing the advantages of targeting the information and communication systems upon which an opponent depends. This general area was later explored by a number of researchers, with the National Defense University establishing a School of Information Warfare Studies in 1992, and the infoWarCon series of conferences launched shortly thereafter. For better or worse, the term “Information Warfare” encompasses the full gamut of techniques whereby information is employed to gain a competitive advantage in a conflict or dispute. The problems raised by “Information Warfare” are fundamental in nature and apply not just to social or computing systems, but to any competitive survival situation where information is exploited by multiple players. In any contest between two machines, for example between a spam generator and a spam filtering engine, both entities obey the very same constraints obeyed by biological organisms exploiting information while competing for survival. This usage amounted to a “soft” definition of “Information Warfare”. It was followed in 1997 by a formal definition produced by the US Air Force, which focussed primarily on military applications in social systems [60]. The formal account has provided a basis for elaboration in the independently developed information-theoretic formalisms of Borden [7] and Kopp [25], which identified “four canonical strategies of Information Warfare” (see §4); in both instances “strategy” was defined in the game-theoretic sense and related to Shannon’s information theory. Further research has aimed to establish the range of environments in which the canonical strategies apply, and to tie them to established research in areas such as game theory, the Observation Orientation Decision Action loop, and the theories of deception, propaganda, and marketing.
Applications of the theory to network security and military electronic warfare have also emerged. Perhaps the most surprising application of the four canonical strategies has been in evolutionary biology. Very little effort was required to establish that the four strategies are indeed biological survival strategies, evolved specifically for gaining an advantage in survival games. In the evolutionary arms race pursued by all organisms, the use of information is a powerful offensive and defensive weapon against competitors, prey and predators. The four canonical strategies of Information Warfare provide a common mathematical model for problems that arise in biological, military, social and computing systems. Importantly, this permits a unified approach to Information Warfare, simplifying automation. For example, implementing a robust security strategy requires a common model for analysing and understanding problems arising from the potentially wide range of an opponent’s offensive and defensive uses of information. In this paper we review recent research in this area and provide a comprehensive description of the information-theoretic foundations of “Information Warfare”. The paper does not aim to address broader issues in strategy or how “Information Warfare” may aid or hinder existing paradigms of conflict, nor does it attempt a deeper study of the psychological dimensions of “Information Warfare”.


What is Meant by “Information”?


In the context of Information Warfare, information can be either a weapon or a target. Somewhat surprisingly, and as discussed further below, the definitions and usage of “information” in this literature are often left vague. The Oxford English Dictionary [54] defines information as “knowledge communicated concerning some particular fact, subject, or event; that of which one is apprised or told; intelligence, news”. Another definition from the same source, “separated from, or without the implication of, reference to a person informed”, describes it as “that which inheres in one of two or more alternative sequences, arrangements, etc., that produce different responses in something, and which is capable of being stored in, transferred by, and communicated to inanimate things”. The first definition equates information with news or intelligence regarding a fact, subject or event, while the second describes it as data which can be stored and communicated by machines. Combining these definitions, we may say that information provides knowledge of an object, event or phenomenon that can be stored or communicated by people or machines.

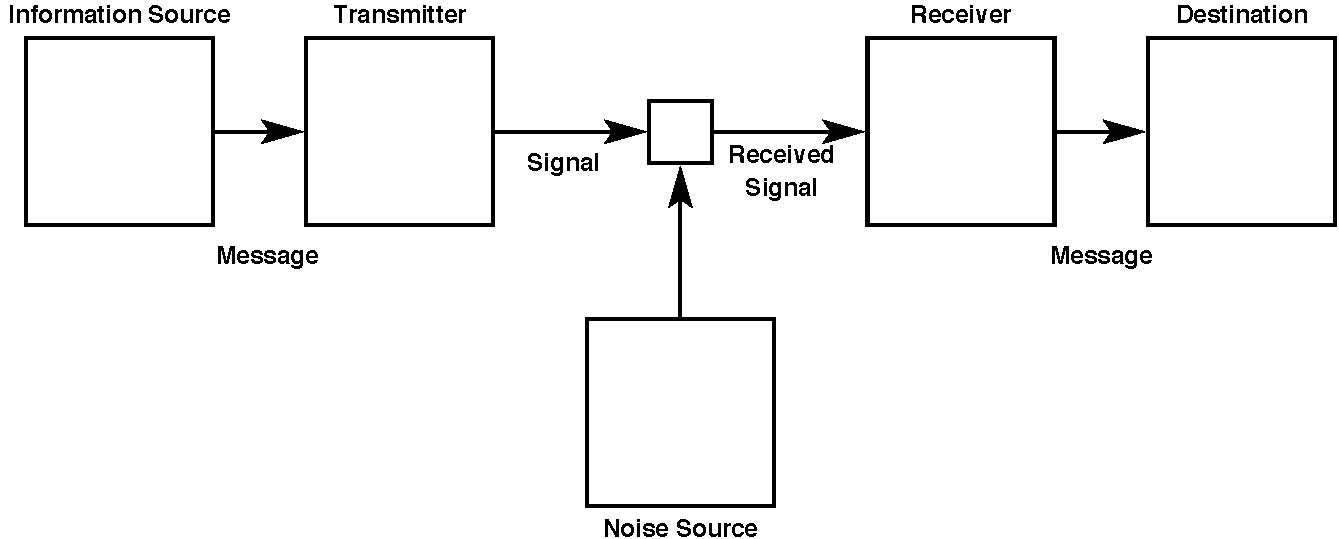

Figure 1: A General Communication System (Shannon, 1948)

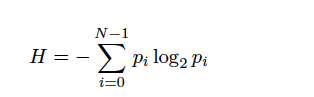

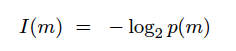

The mathematical definition of information comes from Shannon’s [52] work on communication theory, which proposes an abstract model of communication represented by an information channel between an Information Source and a Destination [53]. This model (Figure 1) is a generalisation of communication and consists of five parts: the Information Source, the Transmitter, the Channel, the Receiver and the Destination. The Information Source selects the Message to send from a set of possible messages. The Transmitter maps the Message into a Signal, which is transmitted over the communication channel. The channel is simply the medium that carries the signal, and its physical instantiation depends upon the communication method. Real channels are non-ideal, so impairments which damage the signal are inevitably introduced during transmission. These impairments may be additive, such as noise or other signals, or may distort the signal. A common model for such impairments is additive white Gaussian noise (AWGN), owing to its wide applicability in electronic communications and its mathematical tractability. In Shannon’s model, noise is produced by a Noise Source connected to the channel. The Receiver collects the signal from the channel and performs the inverse of the Transmitter’s mapping, converting the signal, with any impairments, back into a Message, which is then passed on to the Destination.

In communication theory, information is a measure of the freedom of choice one has when selecting a message for transmission [53]. According to this definition, highly unlikely messages contain much information, while highly predictable messages contain little information. The Shannon information measure (Shannon entropy), $H$, of a message consisting of $N$ symbols appearing with respective probabilities $p_i$ is given by Equation 1:

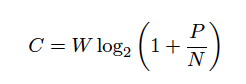
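Equation 1 can be evaluated directly. The minimal Python sketch below computes $H$ in bits (base-2 logarithm, as for a channel measured in bits per second) for a hypothetical source; the function name `shannon_entropy` is ours and purely illustrative. It shows that a source choosing freely among four equiprobable symbols yields 2 bits, while a skewed, more predictable source yields less.

```python
import math

def shannon_entropy(probs):
    """Shannon entropy H = -sum(p_i * log2(p_i)), in bits.

    Zero-probability symbols are skipped, since p*log2(p) -> 0 as p -> 0.
    """
    if abs(sum(probs) - 1.0) > 1e-9:
        raise ValueError("probabilities must sum to 1")
    return sum(-p * math.log2(p) for p in probs if p > 0)

# Four equiprobable symbols: maximum freedom of choice.
print(shannon_entropy([0.25, 0.25, 0.25, 0.25]))  # 2.0
# A skewed source is more predictable, so it carries less information
# (about 1.36 bits here).
print(shannon_entropy([0.7, 0.1, 0.1, 0.1]))
# A certain outcome carries no information at all.
print(shannon_entropy([1.0]))  # 0.0
```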

Shannon and Weaver provide a simple demonstration of how the probability of a message’s selection affects the amount of information in the transmitted message [53]. Suppose there is a choice between two possible messages, whose probabilities are $p_1$ and $p_2$. The measure of information, $H$, is maximised when the two messages are equally probable, that is $p_1 = p_2 = 1/2$; this occurs when one is equally free to select either message. Should one message become more likely than the other, $H$ will decrease, approaching zero as the probability of one message approaches one. Shannon [52] also demonstrated that the capacity of a noisy communications channel of a given bandwidth is bounded by the relationship in Equation 2, where $C$ is the channel capacity, $W$ is the channel bandwidth, $P$ is the signal power and $N$ is the noise power:

$$C = W \log_2\left(1 + \frac{P}{N}\right) \qquad (2)$$

The channel capacity is measured in bits per second, the bandwidth in Hertz, and the signal and noise powers in Watts. The amount of information a channel can carry can therefore be manipulated via the channel’s bandwidth and signal-to-noise ratio. Given a fixed channel capacity, information transmission is maximised if the actual code lengths of messages equal their Shannon information measure, that is, if an “efficient code” is used [53].
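Both results in the paragraph above can be checked numerically. This is a minimal Python sketch, not code from the cited works: `binary_entropy` evaluates $H$ for the two-message case, and `channel_capacity` evaluates the Shannon-Hartley bound of Equation 2. The bandwidth and power figures are arbitrary illustrative values.

```python
import math

def binary_entropy(p1):
    """H, in bits, for a choice between two messages with probabilities p1 and 1 - p1."""
    return sum(-p * math.log2(p) for p in (p1, 1.0 - p1) if p > 0)

def channel_capacity(bandwidth_hz, signal_w, noise_w):
    """Shannon-Hartley bound C = W * log2(1 + P/N), in bits per second."""
    return bandwidth_hz * math.log2(1.0 + signal_w / noise_w)

# H peaks at 1 bit when p1 = p2 = 1/2 and falls toward 0 as one message dominates.
for p1 in (0.5, 0.75, 0.9, 0.99, 1.0):
    print(f"p1={p1:<5} H={binary_entropy(p1):.3f} bits")

# Capacity scales linearly with bandwidth but only logarithmically with SNR.
print(channel_capacity(3000.0, 1.0, 1.0))     # 3000 Hz at SNR = 1: 3000.0 bits/s
print(channel_capacity(3000.0, 1000.0, 1.0))  # 3000 Hz at SNR = 1000 (30 dB)
```

The logarithm in Equation 2 is why raising the noise power (jamming) or starving a channel of bandwidth are both effective ways to degrade it: a thousandfold increase in signal power buys only about a tenfold increase in capacity in this example.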

Shannon’s definition of information is a purely quantitative measure, determined by the probability of the message’s transmission [55]. Information as defined by Shannon is therefore a property of a communication signal and should not be confused with the semantics (meaning) of the signal. Weaver linked Shannon information to thermodynamic entropy [55]. In the physical sciences, entropy is used as a measure of a system’s disorder, that is, its lack of organisation and structure: a system with high entropy is highly chaotic or random, while low entropy indicates a well-ordered and predictable system. Following Weaver, Shannon entropy reports how organised the information source is, which determines the rate of information generation [53]. Shannon’s model relativises information to the prior probability distribution of the receiver: prior probabilities report the predictability of the message and thus its information content, or “surprise value”.

Another definition of information, similar to Shannon’s, is provided by Wiener [61]. Wiener describes information from a cybernetic point of view, where it is a property of the signals communicated between the various components of a system, describing the system’s state and operations. While Shannon’s definition was only applied to communication, Wiener applies his to the control and communication processes in complex mechanical, electronic, biological and organisational systems. Wiener also defines information mathematically in terms of probability, where the probabilities describe the choice between alternatives. Thus, Wiener’s definition also relates information and entropy; in particular, Wiener’s information measures negative entropy, where the information in a communicated message is a measure of the order, or lack of randomness, in the message.
Shannon’s measure, on the other hand, was the information source’s entropy, describing the uncertainty of the message being transmitted, which can be interpreted as the number of bits needed in an efficiently utilised (noiseless) channel to report the state of the information source. Thus, in Shannon’s case information might be described as a potentiality, a measure of how likely a signal is to occur. In both these definitions information is a property of the communicated signal. Bateson [1] instead defines information as a “difference which makes a difference”. According to Bateson, this definition is based upon Kant’s assertion that an object or phenomenon has a potentially infinite number of facts associated with it. Bateson argues that sensory receptors select certain facts from an object or phenomenon, which become information. Bateson suggests that a piece of chalk may be said to have an infinite number of “differences” between itself and the rest of the universe. These differences are mostly useless to an observer; however, a few of these differences are important and convey information to the observer. The filtered subset of important differences for the chalk could include its colour, location, shape and size. In Bateson’s definition of information, which differences are filtered to become information depends upon the perspective of the interested party. Determining or quantifying the value of an item of information is thus dependent upon the observer, the circumstances of that observer, and the time at which the information is acquired. For instance, knowing which stocks will gain in the market before other observers know can yield a higher value than learning this information at the same time as others. Learning such information under circumstances where it cannot be exploited inevitably diminishes its value. 
In games of incomplete information, discussed later, the value of information in reducing uncertainty can be related directly to the payoff in the game [42, 18]. If the information yields a high payoff that would otherwise be denied, the information is of high value. If the game is iterated or comprises multiple turns or steps, the value of an item of information changes over time, so the time at which it is acquired also determines its value. In this sense, Bateson’s representation is a qualitative mapping of what modern game theory tells us indirectly about the context- and time-variant properties of the value of information. This paper will not explore the problem of how to quantitatively determine the value of an item of information, an area well studied in recent game theory, as that problem is distinct from the problems arising from the use of information to gain an advantage in a contest or conflict.

Boisot [6] provides a different model of information, arguing that what has previously been called information can instead be considered as three distinct elements: data, information and knowledge. Entities first observe and make sense of data, converting it to information, which is then understood and incorporated into the entity’s knowledge base. Data describes the attributes of objects, while information is a subset of the data, produced by the filtering of an entity’s perceptual or conceptual processes. Boisot’s definition of information is more psychologically oriented and much broader than the mathematical definitions of Shannon or Wiener.¹

Definitions of “information” fall into two categories, quantitative or qualitative: the strictly mathematical definitions of Shannon and Wiener versus ordinary language definitions, such as those of the Oxford English Dictionary, Bateson and Boisot. From a mathematical perspective, information is a property of a communicated signal, determined by the probability of that signal.
The more likely a signal is, the less information it carries; the less likely it is, the more surprising its arrival and so the more information it possesses. The informal definitions consider information to be descriptions of some aspects of the world that can be transmitted and manipulated by biological organisms and machines. Of course, quantitative and qualitative definitions are potentially compatible and can be used jointly. When “information” is used in the context of Information Warfare, it is commonly in its qualitative sense. For example, when describing Information Warfare against computer systems, information may refer to a computer program, stored data or a message sent between systems. The qualitative definitions of information are, however, vague, leading to conflation with distinct concepts such as knowledge, data and belief. Applying Shannon’s definition of information allows Information Warfare to be studied more rigorously. Whereas the mathematical definitions treat information as a property of a communicated signal, under a qualitative interpretation it is likely to be confused with the semantics of the signal. In any case, the term “information” as it appears in much of the literature is context sensitive, and that context must be interpreted carefully if the meaning of the text is to be read as intended (i.e., with a high signal-to-noise ratio).
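The link drawn above between the value of information and the payoff in a game of incomplete information can be made concrete with the standard decision-theoretic quantity known as the expected value of perfect information (EVPI). The Python sketch below is our illustration, not a method from the cited works: the two opponent moves, their prior probabilities and the payoff table are all hypothetical. EVPI is the expected payoff of a player who learns the true state before choosing, minus the best expected payoff available when choosing blind.

```python
def value_of_perfect_information(prior, payoff):
    """Expected value of perfect information (EVPI) for a one-shot decision.

    prior:  dict mapping state -> probability (the player's uncertainty)
    payoff: dict mapping action -> dict mapping state -> payoff to the player
    """
    # Without information: commit to the single action with the best expected payoff.
    best_uninformed = max(
        sum(prior[s] * outcomes[s] for s in prior) for outcomes in payoff.values()
    )
    # With perfect information: learn the state first, then pick the best action for it.
    informed = sum(
        prior[s] * max(outcomes[s] for outcomes in payoff.values()) for s in prior
    )
    return informed - best_uninformed

# Hypothetical example: an opponent will move "north" or "south", and the
# player must decide where to defend before seeing the move.
prior = {"north": 0.6, "south": 0.4}
payoff = {
    "defend_north": {"north": 10, "south": -5},
    "defend_south": {"north": -5, "south": 10},
}
print(value_of_perfect_information(prior, payoff))  # 6.0 (informed 10.0 minus blind 4.0)
```

With a 50/50 prior the same payoff table gives an EVPI of 7.5: the more uncertain the player, the more the information is worth, and so the greater the incentive for an opponent to deny or corrupt it.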


Processing of Information by an Entity


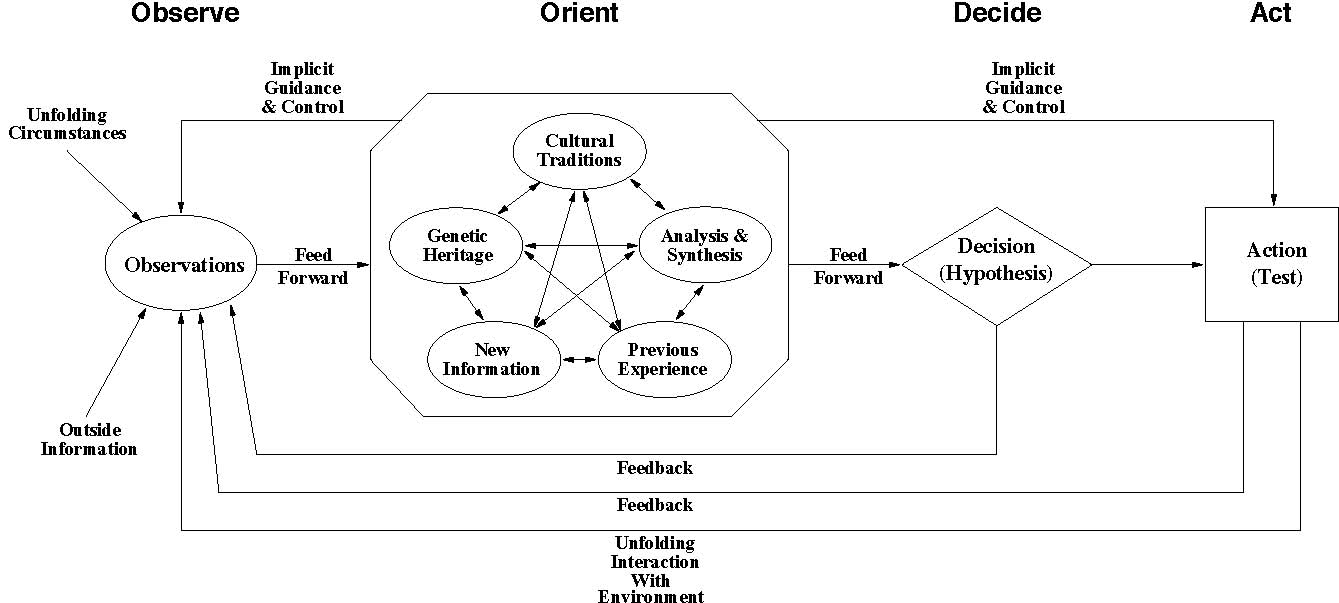

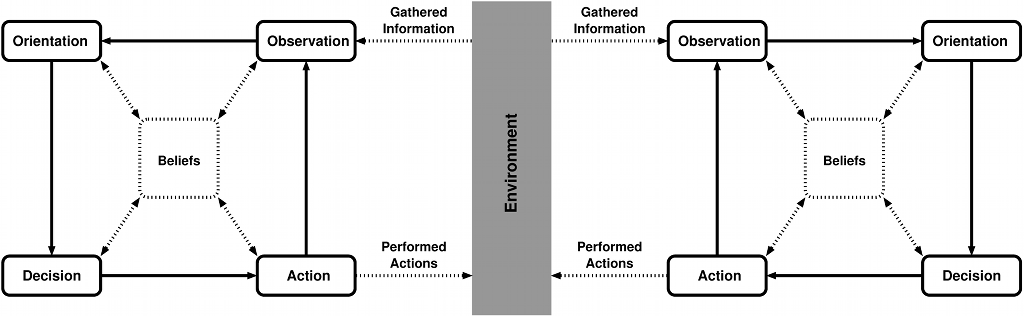

The target of an action to manipulate or impair information used by a competing entity is the victim’s decision-making. From a game-theoretic perspective the intention is to compel or entice the victim into making choices which are to the advantage of the attacker. Understanding such mechanisms is therefore important to understanding how Information Warfare produces its intended effect. Any decision-making mechanism is inherently constrained by the information collection and processing behaviours of the system of which it is part. There are many conceptual models which attempt to describe the information collection and processing behaviours of entities. Such models provide a relatively simple representation of the decision-making process, against which the effects of Information Warfare on the entity’s decision-making process can be studied. One such model of the decision-making cycle is the Observation Orientation Decision Action (OODA) loop model [10, 48], which we have employed in our research due to its generality, and because it is widely used and understood. The OODA loop model is a method of representing the decision-making and action cycles of an entity. It was originally developed to model the decision-making process of fighter pilots; however, its generality makes it suitable for modelling most decision-making cycles. The OODA loop is commonly used to describe the decision-making process in both military and business strategy [56]. Boyd’s OODA loop is a four-step cyclic model which describes the information gathering, decision-making and actions of an entity, with earlier behaviour providing feedback to the current analysis and decision activities (Figure 2). The model breaks the continuous act of perception and its subsequent decision-making into four discrete steps, an accurate description of many entities or systems; the model can also be adapted to systems which do not operate in discrete steps.
The loop begins with the Observation step, where the entity collects information about the state of its environment. This information may be collected with any sensors the entity possesses.

Figure 2: Boyd’s OODA (Observation Orientation Decision Action) loop model (Boyd 1986). Note the feedback from present Decisions and Actions to future Observations, as well as the control that Orientation has over Observation and Action.

During the Orientation step, the gathered information is combined with the entity’s stored beliefs about the environment and itself, which may include previous experiences, cultural traditions, genetic heritage, and analysis and synthesis methods. All of this is used to update the entity’s internal model of its environment. The internal model represents the entity’s current understanding of the state of its environment and is a product of the entity’s perceptions, beliefs and information processing abilities. This internal model may or may not accurately match reality. Boyd [11] states that the Orientation step is the “schwerpunkt” (focal point or emphasis) of the OODA loop model, as an entity’s Orientation determines how it will interact with the environment, affecting how it Observes, Decides and Acts. After updating its understanding of its environment, the entity enters the Decision step, where it considers its potential actions and the expected outcomes of these actions. If the entity is a rational decision-maker, it will select the action or actions which it believes will lead to its most preferred outcomes. The possible actions and their expected outcomes that the entity develops are entirely a product of the entity’s model of its environment. Therefore, the entity will only consider actions it believes are possible and the outcomes it believes those actions will have. The entity’s beliefs therefore constrain its decision-making. During the Action step, the entity performs its selected action or actions. This interaction changes the state of the environment. Any changes the entity causes can be observed in future OODA loop cycles, along with changes caused by other entities. This functions as a feedback loop between the entity and its environment. Similar cyclical models of the decision-making process have also been proposed [43, 44, 49]. 
These models commonly describe the decision-making process as an ongoing feedback loop where an entity’s current beliefs guide how it collects and interprets new information, before basing its decisions upon these beliefs.
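To make the cycle concrete, here is a deliberately minimal Python sketch of a single entity running Observation, Orientation, Decision and Action in a loop. It is a hypothetical illustration rather than a model from the cited literature: the one-dimensional `target`, the fixed fusion weight `gain`, and the `noise` and `bias` parameters (the latter a stand-in for corrupted observations) are all our assumptions. The feedback described above is present because each Action moves the entity, which changes what it Observes on the next cycle, and the Decision is computed only from the internal model.

```python
import random
from dataclasses import dataclass

@dataclass
class Environment:
    target: float          # true state of the world the entity cares about
    position: float = 0.0  # the entity's own state, changed only by its Actions

@dataclass
class Entity:
    belief: float = 0.0  # internal model: estimated location of the target
    gain: float = 0.5    # Orientation: weight given to new data vs stored belief

    def observe(self, env, rng, noise=0.0, bias=0.0):
        # Observation: a relative sensor reading of the target. `noise` models
        # random sensor error; `bias` stands in for systematically corrupted data.
        return env.target - env.position + rng.gauss(0.0, noise) + bias

    def orient(self, reading, own_position):
        # Orientation: turn the reading into a target estimate and fuse it
        # with the stored belief (a simple exponential update).
        measured = own_position + reading
        self.belief += self.gain * (measured - self.belief)

    def decide(self, own_position):
        # Decision: the move is computed from the internal model only,
        # never from the true target, so beliefs constrain decisions.
        return self.belief - own_position

    def act(self, env, move):
        # Action: change the environment; the effect is seen in the next Observation.
        env.position += move

def run_ooda(cycles=20, noise=0.0, bias=0.0, seed=0):
    rng = random.Random(seed)
    env, entity = Environment(target=10.0), Entity()
    for _ in range(cycles):
        reading = entity.observe(env, rng, noise, bias)
        entity.orient(reading, env.position)
        move = entity.decide(env.position)
        entity.act(env, move)
    return env.position, entity.belief
```

With clean observations, `run_ooda()` drives the entity's position toward the true target at 10.0. With `run_ooda(bias=3.0)` it settles near 13.0 instead: every step of the loop works as designed, yet the entity acts consistently on an internal model that does not match reality.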

Figure 3: A simplified OODA loop model showing how two entities interact with each other through the environment.

A typical, implicit assumption in the OODA loop model is that it involves players in a competitive game, whether one of complete or incomplete information. In either circumstance, what information is perceived by these players, and how it is understood or misunderstood, determines the subsequent actions or moves by the players, and the eventual outcomes and payoffs in the game. In a game of perfect information, a player who is unable to understand the environment due to internal processing errors (as we discuss later) will make poor choices in the game. Games of incomplete information are much more interesting, as they introduce uncertainty into the game. As a result, some information is of more value to a player than other information. That value in turn translates into payoff, so a competing player gains a direct benefit if information of high value can be rendered unusable to its opponent. This is an important nexus in the study of Information Warfare, connecting game theory and information theory and showing the fundamental motivation for the use of information against opponents in such contests.

The relationship between games of incomplete information and Information Warfare was explored in some detail by Kopp [27], using the hypergame representation of Fraser and Hipel [18], with subsequent research by Jormakka and Mölsä [24] applying game theory models in relation to the OODA loop. The Boyd OODA loop model is valuable here as it provides a framework for studying how the four canonical strategies of Information Warfare specifically impair the information processing functions of a victim, and in turn how this impairs the victim’s decision process. In effect, it explains the inner workings of how impairments to information transmission and processing result in impairments to decision-making, and thus in failure to secure an optimal payoff in a game.
Any interaction between entities, including communication, occurs through the environment. One entity’s actions manipulate the environment, and this may be perceived by other entities during their Observation steps. Figure 3 demonstrates how this exchange occurs between two entities, although there is no limit, theoretical or otherwise, to how many entities may interact in such a manner. The interactions which occur may be either the implicit or explicit result of the Actions of one entity. In Boyd’s model, information is collected during the Observation step before being processed and contextualised during the Orientation step. Any information and its derivative products are then used during the Decision step to guide the entity’s future Actions. Offensive acts of Information Warfare, or unintentional errors which produce similar outcomes, affect the victim’s Orientation or Observation steps [12]. The product of these actions may then affect current or future loop iterations, with intentional actions intended to produce the effect desired by the attacker. Since unintentional errors and Information Warfare attacks can have similar outcomes, Information Warfare attacks may be disguised as unintentional errors in order to temporarily or permanently conceal the attacker’s hostile intentions. Unintentional errors have various possible causes and, like Information Warfare, may affect an entity during its Observation and Orientation steps. During the Observation step, temporary or permanent errors may affect the entity’s sensors’ ability to correctly and promptly collect information. Errors during the Orientation step may affect how an entity processes and stores information. Intentional deception is a key element of Information Warfare attacks, where corrupted information is purposefully communicated to a victim in order to alter its behaviour in a specific manner. 
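Shannon’s framework offers one way to quantify what an impairment in the Observation step costs the victim. The minimal Python sketch below assumes a binary state of the environment (for example, threat present or absent) and a sensor that misreports it with a fixed probability, i.e. a binary symmetric channel; this modelling choice and the function names are our assumptions. The mutual information between the true state and the report measures how much the observation actually tells the entity. Note that the calculation depends only on the error rate, not on whether the errors come from a fault or from an attacker, which is why an attack can be disguised as an unintentional error.

```python
import math

def h2(p):
    """Binary entropy, in bits."""
    return sum(-x * math.log2(x) for x in (p, 1.0 - p) if x > 0)

def observation_information(prior_true, error_rate):
    """Mutual information I(X;Y), in bits, between a binary state X with
    P(X = true) = prior_true and a sensor report Y that is flipped with
    probability error_rate: I(X;Y) = H(Y) - H(Y|X)."""
    p_report_true = prior_true * (1 - error_rate) + (1 - prior_true) * error_rate
    return h2(p_report_true) - h2(error_rate)

for e in (0.0, 0.1, 0.3, 0.5):
    print(f"error rate {e:.1f}: {observation_information(0.5, e):.3f} bits")
```

At an error rate of 0.5 the report is statistically independent of the true state and conveys nothing, however confidently the entity then Orients and Decides upon it.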
Entities with incorrect beliefs may also unintentionally deceive, by communicating corrupted information during what they believe is truthful communication (cf. [37]). If this deception is successful, the victim will develop incorrect beliefs similar to those the attacker possesses. Unintentional deception may be more effective than intentional deception, since the attacker’s behaviour will support the perceived veracity of the corrupted information it has communicated. The consistency between an entity’s statements and its other behaviour during deception is called multi-channel support [20]. Such behaviour is implicitly available during unintentional deception, yet must be explicitly provided during intentional deception. A specific type of deception is self-deception, where an entity is both the attacker and the victim of the deception attack. Self-deception allows an entity to intentionally manipulate its own beliefs to its own ends, and has been observed and studied in human decision-making [45, 50]. Self-deception is of interest not only because the mechanisms which impair the decision cycle are no different from those used in intentional deception, but also because a sophisticated attacker might aim to exploit or reinforce existing self-deception arising in the victim system [20]. Self-deception affects the decision-making process during an entity’s Orientation step [13], where conflicting beliefs are compared. The entity then corrupts its own beliefs to remove the conflict, allowing the decision-making process to continue as normal. It is possible that such corruption is adaptive, and even intentional. Trivers [58] has argued that self-deception may aid deception, by allowing a deceiver to corrupt its beliefs in order to provide multi-channel support for its deception against others. Once the deception is complete, the entity may be able to remove its incorrect beliefs and benefit directly from the deception.

Regardless, self-deception may allow a person to resolve conflicting beliefs, thereby reducing cognitive dissonance and the psychological discomfort it causes [46]. Another argument suggests that self-deception did not evolve as an adaptation, but is instead an unintentional byproduct of one or more other cognitive processes or structures [59]. It may, of course, be both.

Instances of “situating the appreciation” present interesting case studies. Some instances are demonstrably deception of the audience by Corruption and/or Subversion, where the author is intentionally avoiding or “spinning” the discussion of the consequences of previous poor decisions. However, many may represent instances of self-deception by Corruption and/or Subversion, intended to avoid cognitive dissonance by post-facto rationalisation. Often the deceptive aspect of “situating the appreciation” reflects an effort to reinforce existing self-deception arising in the victim audience, which may include the author [20].

The problems of self-deception, unintentional deception and other forms of dysfunctional information processing are connected with established research in psychology and sociology dealing with statistical decision theory and information processing. The ability to process large volumes of data, which may or may not be rich in information, is inherently constrained by the computational capacity of the system processing this input, and by the effectiveness of the algorithms employed for this purpose. In the contexts of sociology, psychology and Information Warfare, this problem has been studied by Toffler, Lewis and Libicki. Toffler identifies the social problems arising from ‘information overload’, where anxiety and social turmoil arise in populations exposed to conditions where the rate of social change, and the associated volume of information to be processed, exceed the available capacity [57]. Lewis explored the psychological effects on individuals exposed to volumes of information exceeding their processing capacity.
In a large survey of managers he found effects which he termed ‘Information Fatigue Syndrome’, resulting in “a weariness or overwhelming feeling of being faced with an indigestible or incomprehensible amount of information”, with symptoms including “paralysis of analytical capacity”, “a hyper-aroused psychological condition”, and “anxiety and self-doubt” leading to “foolish decisions and flawed conclusions” [38]. Libicki earlier explored the same effect arising from intentional Information Warfare attacks, as the “induced volume of useless information which hinders discovering the useful information” [39]. Libicki also notes that the possible responses to such a flood of information will either hinder the victim’s agility in adjusting to unexpected events or erect internal obstacles to the integration of new information [40]. A common thread running through all such research is that strong psychological effects arise when humans are confronted with volumes of information exceeding their processing capacity, or with information which challenges earlier understanding or beliefs. In both instances the ability to process the information effectively and extract useful meaning is constrained, and the resulting psychological effects exacerbate the problem [33]. Berne’s [4, 5] work in transactional analysis, which explores social games in human interaction and dysfunctional human behaviours, notes the frequency with which deception arises in human interactions. In most of the extensive collection of social games which Berne identifies, uncertainty about the intent of the other players results in games of incomplete information, where deception and the hiding of information are frequent.
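The capacity constraint described by Toffler, Lewis and Libicki, and Libicki’s induced flood of useless information in particular, can be illustrated with simple arithmetic. The Python sketch below assumes, purely for illustration, a processor that can examine only a fixed number of items per step and cannot tell useful from useless items before examining them, so it samples its inputs uniformly at random; the expected number of useful items examined is then the mean of a hypergeometric distribution. The function name and all numbers are hypothetical.

```python
def expected_useful_processed(useful, junk, capacity):
    """Expected number of useful items examined per step when a processor that
    can examine only `capacity` items picks them uniformly at random, being
    unable to distinguish useful from useless items beforehand."""
    total = useful + junk
    if total <= capacity:
        return float(useful)  # spare capacity: everything gets examined
    return capacity * useful / total  # hypergeometric mean

# An attacker injecting ever more junk into a channel with fixed processing capacity.
for junk in (0, 50, 200, 1000):
    print(junk, round(expected_useful_processed(useful=20, junk=junk, capacity=40), 2))
```

Once the input volume exceeds capacity, every additional useless item dilutes the useful ones, so the attacker degrades the victim without having to corrupt or block a single genuine message.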
Davis in Heuer [21] argues that “the mind is poorly ‘wired’ to deal effectively with both inherent uncertainty (the natural fog surrounding complex, indeterminate intelligence issues) and induced uncertainty (the man-made fog fabricated by denial and deception operations).” This observation is consistent with our findings on the importance of self-deception, especially in an environment of uncertainty and intentional deception. Exploitation of human psychology is central to many established and well-documented deception techniques, in which weaknesses in how the victim analyses and integrates information are commonly exploited. Haswell’s [20] extensive study of classical deception techniques provides a wealth of examples. These examples have been explored within the framework of information-theoretic models by Kopp [28], a study later extended to deception techniques used in political and product marketing [30]. The study of information processing and decision mechanisms in a victim system thus provides the bridge connecting game-theoretic views of Information Warfare with the information-theoretic models which describe specific strategies of Information Warfare. While uncertainty in games of incomplete information gives information its value, motivating players to attack or protect information channels and information gathering and processing mechanisms, game theory cannot tell us how the information is processed by a victim, or how it might be manipulated by an attacker. Information theory can explain the various mechanisms through which a channel can be attacked, and how such attacks will impair a victim’s decision cycle, in turn determining a player’s choices in a game of incomplete information.
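
The claim that uncertainty gives information its value can be made concrete with the standard decision-theoretic expected value of perfect information: the gain from learning the true state before acting, compared with committing to the single action that is best on average. This is a textbook construction rather than a model taken from this monograph, and the scenario, state names and payoffs below are invented purely for illustration.

```python
# Hypothetical payoffs for a defender who must commit forces before knowing
# where an attack will fall. PAYOFF[state][action]; all numbers are invented.
PRIOR = {"attack_north": 0.6, "attack_south": 0.4}
PAYOFF = {
    "attack_north": {"defend_north": 10.0, "defend_south": -5.0},
    "attack_south": {"defend_north": -8.0, "defend_south": 6.0},
}


def value_without_information(prior, payoff):
    """Best expected payoff when one action must be chosen using the prior alone."""
    actions = next(iter(payoff.values())).keys()
    return max(sum(prior[s] * payoff[s][a] for s in prior) for a in actions)


def value_with_perfect_information(prior, payoff):
    """Expected payoff when the true state is learned first and the best reply is chosen."""
    return sum(prior[s] * max(payoff[s].values()) for s in prior)


if __name__ == "__main__":
    blind = value_without_information(PRIOR, PAYOFF)          # defend_north: 0.6*10 + 0.4*(-8) = 2.8
    informed = value_with_perfect_information(PRIOR, PAYOFF)  # 0.6*10 + 0.4*6 = 8.4
    print(f"value of perfect information: {informed - blind:.1f}")  # 5.6
```

The difference is never negative, and it falls to zero when one action is best in every state, that is, when there is no uncertainty worth resolving. Where it is large, the channels that could resolve the uncertainty become worth attacking or protecting.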


What is Meant by “Information Warfare”?


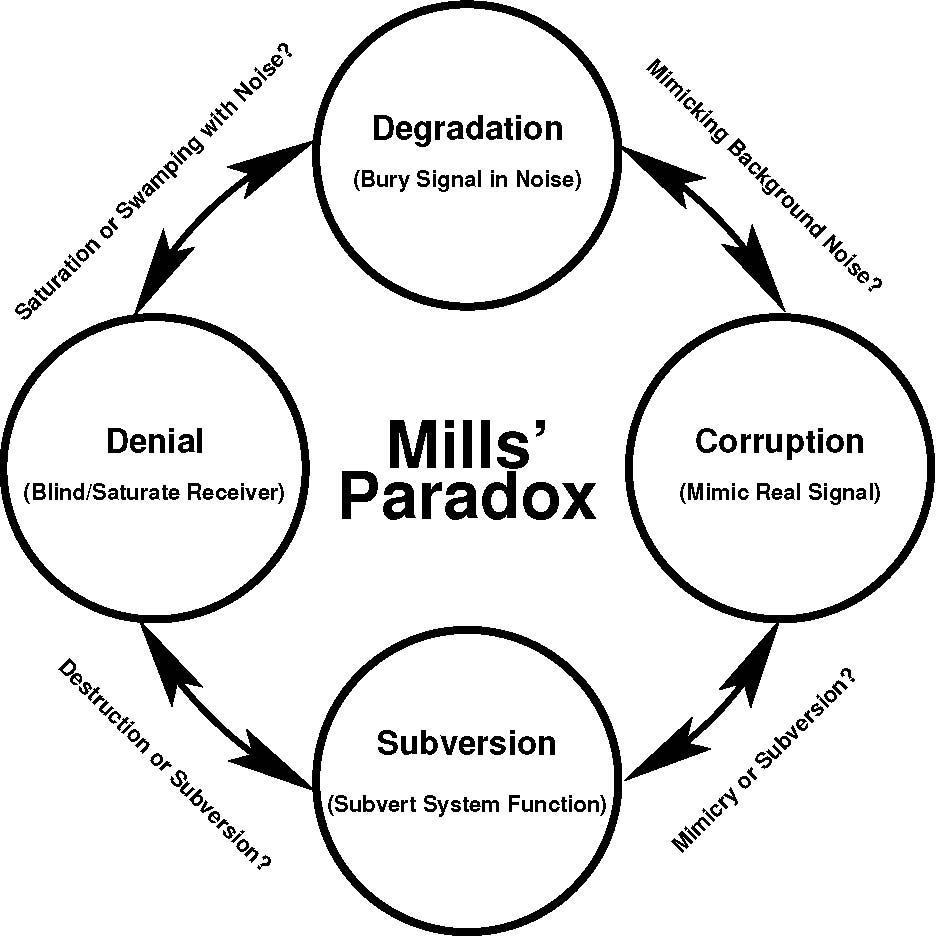

There are numerous and often divergent definitions of Information Warfare in current usage, reflecting in part the pervasive nature of the phenomenon and in part the differing perspectives of observers studying the problem. The various definitions of Information Warfare describe actions such as using information as a weapon, targeting information processing infrastructure and protecting one’s own information and information processing infrastructure. In order to provide a better understanding of Information Warfare, we examine some of the more prominent definitions, along with examples of possible offensive and defensive Information Warfare actions. The core elements of Information Warfare will be revealed through these examples. An early definition of Information Warfare by Schwartau [51] considers it in a social context, describing various attacks against information systems and telecommunications networks. Schwartau states that “Information Warfare is an electronic conflict in which information is a strategic asset worthy of conquest or destruction”, a definition covering only offensive actions. Schwartau identifies the overall goals of Information Warfare attacks as the theft, modification and destruction of information and the destruction of the information infrastructure, with the ultimate goals of acquiring money and power and generating fear. Schwartau points out that Information Warfare takes advantage of modern societies’ dependence on information and information systems and, unlike traditional warfare, is not restricted to governments or government agencies. Schwartau specifies three classes of Information Warfare attacks, using a taxonomic approach that focuses on the type of target attacked. The first class of operations is Personal Information Warfare, in which individuals and their personal details, stored in electronic databases, are the targets.
Schwartau describes it as “an attack against an individual’s electronic privacy”, in which the attacker views or manipulates data about the individual stored by various companies and government agencies. Schwartau points out that individuals cannot directly protect this information and will often have difficulty correcting any erroneous entries created during an attack. Such an attack could create a false outstanding arrest warrant or supply misinformation to blackmail the individual, although the most common use at this time appears to be “identity theft”, typically to facilitate fraudulent use of credit cards. The second class of operations is Corporate Information Warfare, in which companies are targeted, typically by their competitors. Schwartau gives industrial espionage, spreading disinformation, leaking confidential information and damaging a company’s information systems as potential examples. Global Information Warfare is the third class of operations, and its victims include industries, political spheres of influence, global economic forces, non-national entities and nations. Typical examples of acts within this category include theft of secrets, denial of technology usage and the destruction of communications infrastructure. Schwartau claims that “it would be stupid for a well-financed and motivated group to not attack the technical infrastructure of an adversary,” given the clear vulnerabilities, low risk and large reward of these attacks. Schwartau’s definition of Information Warfare covers only offensive actions that use or affect some sort of electronic information system, which implies that Information Warfare is a modern development. Schwartau’s decision to categorise Information Warfare attacks according to their intended victim recognises that not all victims are equal and that the motivations for attacking them differ.
Libicki’s [39] definition also provides a taxonomy of Information Warfare, but divides the constituent operations by the environment in which they occur. He gives seven distinct types of operational behaviour that can be described as Information Warfare, all of which are “conflicts that involve the protection, manipulation, degradation and denial of information”. Libicki’s seven types of Information Warfare are: Command and Control Warfare, Information Based Warfare, Electronic Warfare, Psychological Warfare, Hacker Warfare, Economic Information Warfare and Cyberwarfare. Command and Control Warfare attacks the command and communications infrastructure of an opponent, in order to degrade its responses to further military action. Command facilities are destroyed to prevent military decision-making, while communications infrastructure is destroyed to prevent the flow of information between decision-makers and the troops implementing those decisions. Libicki cites the effectiveness of United States Command and Control Warfare against Iraqi forces as the main reason that the bulk of those forces were ineffectual during the first Gulf War. Information Based Warfare is the collection and use of information when planning and implementing military actions. A typical example is using information gained by reconnaissance to assess the effectiveness of previous military attacks or to determine the priority of targets for future strikes, that is, increasing the commander’s situational awareness. Electronic Warfare attempts to degrade the physical basis of an opponent’s communications. There are three main targets for Electronic Warfare attacks: radar receivers, communication systems and communicated messages. Anti-radar attacks aim to prevent an opponent’s radar from detecting vehicles, using electronic or physical assaults. Communications systems may be electronically jammed or their physical infrastructure located and destroyed.
Cryptography is used to conceal the contents of one’s own communications and to reveal the contents of an opponent’s communications. Psychological Warfare is defined as the use of information against the human mind, and Libicki divides it into four sub-categories based upon its intended target. Counter-will operations target a country’s national will, aiming to transmit a deceptive message to an entire population. In a military context, such messages typically suggest that the country’s present and future military operations are likely to fail. Counter-forces attacks target an opponent’s military troops, aiming to convince them that fighting is against their best interests. Counter-commander operations are intended to confuse and disorient an opponent’s military commanders, degrading their decision-making abilities. Cultural conflict targets an opponent’s entire culture, attempting to replace its traditions and beliefs with those of the attacker. Libicki states that while cultural conflict has a long history, its implementation is greatly aided by modern technology. Hacker Warfare consists of attacks against civilian computer networks and systems; Libicki instead categorises similar attacks against military computer networks as Command and Control Warfare. Some aims of Hacker Warfare include the temporary or complete shutdown of computer systems, the introduction of random data errors, the theft of information or services and the injection of false message traffic. Libicki points out that the behaviours he categorises as Hacker Warfare encompass many of the actions that Schwartau defines as Information Warfare. Economic Information Warfare is defined as the attempt to control the flow of information between competing nations and societies, and Libicki describes two forms of it. An Information Blockade attempts to prevent the real-time transfer of information by methods such as jamming and destruction of equipment. Libicki argues that this is difficult to achieve against a determined opponent.
Information Imperialism occurs when knowledge-intensive industries become geographically concentrated, which disadvantages those without access to the region. Libicki cites Silicon Valley as an example of Information Imperialism. Libicki’s category of Cyberwarfare collects a variety of attacks which are currently unlikely or impossible, although the media commonly use the term to describe acts which Libicki categorises as Hacker Warfare. One of these attacks is information terrorism, a type of computer hacking aimed at exploiting systems to attack individuals, which is similar to Schwartau’s Class I (Personal) Information Warfare. Semantic attacks are another kind of Cyberwarfare, in which computer systems are given seemingly valid information that causes them to produce incorrect output that nonetheless appears to be correct. Another is simula-warfare, in which simulated warfare replaces conventional warfare; Libicki argues that any competitors who can agree to perform simulated warfare should be capable of negotiating to avoid conflict. Gibson-warfare is another unlikely form of Cyberwarfare, in which a futuristic conflict occurs between virtual characters inside the system itself. Libicki argues that the current information infrastructure has not developed to the point where these attacks are possible, and concedes that in some cases it may never do so. Libicki points out that Information Warfare is not a recent development and that some of its varieties, such as Psychological Warfare, have a long history in human conflict. He also notes that as the information space has developed through technological change, new methods of Information Warfare have evolved. While Libicki proposes seven plausibly distinct forms of Information Warfare, there is some functional overlap between similar attacks. For example, computer hacking may be considered either Hacker Warfare or Command and Control Warfare, depending on whether the attack targets a civilian or military system.
Libicki is also dismissive of the effects of Information Warfare operations performed against non-military targets, such as Hacker Warfare. Although these operations do not directly impede military operations, their effects on the civilian population may reduce political support for those responsible for military operations and thereby achieve military objectives. Attacks that lead to economic losses can also undermine a nation’s capability to wage war. Later work by Libicki [40] has focussed on the use of Information Warfare in Cyberspace, defined as any networked computer or communications system. While hostile attacks are the obvious method by which one may conquer cyberspace, Libicki proposes that friendly conquest is also possible. Friendly conquest recognises the power of seduction and develops from mutually beneficial relationships, in which one party becomes dependent upon the information systems or services provided by the other. Friendly conquest differs greatly from hostile attacks, as it is entered into willingly by the victim in exchange for information, or access to information systems, that the victim values. Widnall and Fogelman [60] defined Information Warfare for the United States Air Force, describing it in a social context specifically oriented to military operations. Information is said to be the product of the perception and interpretation of phenomena, much as in Boisot’s definition. The acquisition, transmission, storage or transformation of information are described as information functions. They define Information Warfare as “any action to Deny, Exploit, Corrupt or Destroy the enemy’s information and its functions; protecting ourselves against those actions and exploiting our own military information functions” [60]. This covers both offensive and defensive Information Warfare. Widnall and Fogelman detail six types of offensive Information Warfare attacks.
Psychological Operations use information to affect the enemy’s reasoning and thereby its behaviour. Electronic Warfare denies the enemy accurate information from the environment. Military Deception misleads the enemy as to the attacker’s capabilities or intentions. Physical Destruction targets the enemy’s information systems for destruction. Security Measures conceal the attacker’s military capabilities and intentions from the enemy. In an Information Attack, an opponent’s information is directly corrupted without visibly changing its physical container. Of these offensive actions, only Information Attack is considered a recent development rather than a traditional military operation; the others are as old as warfare itself. One explicit reason for the Air Force’s interest in Information Warfare is to enhance its ability to accomplish Air Force missions. Another is that the Air Force’s dependence on integrated information systems makes its information functions a desirable target for opponents. This problem is no longer restricted to the Air Force and other large organisations, as most modern societies have become dependent upon information systems for their daily operations. Kuehl [36] provides another military-oriented definition of Information Warfare that considers it in a social context: “Information operations conducted during time of crisis or conflict to achieve or promote specific objectives over a specific adversary or adversaries”. Information Operations are: “Actions taken to affect adversary information and information systems while defending [one’s] own information and information systems”. This implies that Information Warfare is a series of offensive and defensive operations, aimed at a specific goal, that either attack or defend information and information systems.
The requirement that Information Warfare take place during a crisis or conflict seems to imply that it is the exclusive domain of the military, which contradicts Schwartau’s and Libicki’s definitions. Information Warfare is often held to be clearly beneficial to its users when applied strategically by militaries during war [60, 36, 47]; however, its usefulness in this role is debatable. Knowledgeable competitors will learn to expect Information Warfare attacks before and during military operations and will attempt to defend against such strikes [40]. Once an entity reveals its Information Warfare capabilities, much of the surprise factor is lost, and knowledgeable opponents will strengthen their defences against similar attacks in the future. In this way, Information Warfare targeted against information systems and communications networks approaches a coevolutionary race, as attackers locate and exploit flaws in these systems while defenders attempt to correct these flaws as soon as they are observed. This reveals an interesting parallel between Information Warfare attacks and information itself: unexpected attacks, like unexpected information, are more valuable than expected ones. Denning’s [17] work again takes up Information Warfare in a social context, this time oriented toward information systems and computer security. However, Denning also notes that Information Warfare is not a recent human development, nor, for that matter, restricted to humans. She defines Information Warfare as offensive and defensive operations performed against information resources. Information resources are objects that operate upon information in some manner. In any Information Warfare operation there are at least two players: an offensive player who targets an information resource and a defensive player who protects the information resource from the operation.
Players may be individuals or organisations, which may or may not be nation states and may or may not be sponsored by others. Offensive players are broadly categorised as insiders, hackers, criminals, corporations, governments or terrorists. As every individual and organisation possesses information resources, every individual and organisation is a potential defensive player. Information resources are targeted because they are of some value to at least one player. Offensive Information Warfare operations aim to increase the value of an information resource to the attacker and decrease its value to the defender. This framework provides a game-theoretic outlook on Information Warfare, in which players select offensive and defensive strategies that result in various outcomes with differing payoffs for the players. Denning states that there are three overall aims of offensive Information Warfare operations: to increase the availability of the information resource to the attacker; to decrease the availability of the resource to the defender; and to decrease the integrity of the information resource. These aims closely match Schwartau’s stated overall goals for Information Warfare, namely the theft, modification or destruction of information and the destruction of information infrastructure. Stealing or modifying information increases the availability of the information resource to the attacker. Modifying or destroying information decreases the integrity of the information resource. Destroying the information infrastructure decreases the availability of information resources to the defender. Information resources are protected from Information Warfare attacks by defensive Information Warfare operations, which Denning categorises as prevention, deterrence, indication and warning, detection, emergency preparedness and response.
Examples include laws and policies that deter various Information Warfare operations, physical security measures that prevent access to information resources and procedures for dealing with the aftermath of a successful attack. Denning provides a comprehensive description of Information Warfare of both offensive and defensive kinds. Importantly, representing Information Warfare in game-theoretic terms allows Game Theory to be applied in analysing instances of Information Warfare. While Denning’s examples of Information Warfare focus on computer networks, communication systems and other modern information infrastructure, she acknowledges the presence of Information Warfare in evolutionary biology. Borden [7] took Widnall and Fogelman’s [60] definition of Information Warfare and mapped it onto Shannon’s model of information, producing a definition of Information Warfare that also places it in a social context, again with a military orientation. Widnall and Fogelman described four main offensive actions which could be performed against an adversary’s information and information infrastructure: Denial, Exploitation, Corruption and Destruction. Borden argues that four main offensive operations, which he labels Degradation, Corruption, Denial and Exploitation, make up Information Warfare, and that any action said to be Information Warfare may be categorised within one of these strategies. Degradation involves delaying the use of information or damaging it partially or completely; thus, Degradation operates upon the information itself. Examples given are hiding information from an adversary’s collection task and jamming a communications channel, thereby delaying the transmission of messages. Corruption provides false information to the adversary or corrupts information that the enemy already possesses. Examples are the use of dummies on the battlefield, spoofing transmissions on the adversary’s communications channel and Psychological Operations performed against the enemy or their allies.
The use of dummies and spoofing transmissions attempts to supply corrupt information that the adversary will accept as valid, while Psychological Operations target information already possessed by the target. Denial is “a direct attack on the means of accomplishment”, meaning anything that the adversary uses for information collection and processing. Possibilities include the destruction or disabling of an electro-optic sensor by a High Energy Laser, and a virus that destroys the operating system of a computer used by the enemy for decision-making. Denial attacks may either permanently destroy the targeted system or temporarily disable it. Exploitation is the collection of information directly from the adversary’s own information collection systems. The information collected may be useful for understanding the adversary’s point of view. The overall aims of these strategies match Denning’s three aims of Information Warfare, since Degradation and Denial both reduce the availability of information to the defender, Corruption reduces the integrity of the information and Exploitation increases the availability of information to the attacker. Independently of Borden, Kopp [25] also arrived at four strategies for offensive Information Warfare. However, Kopp started from Shannon’s model of communication and derived from it three types of offensive strategy that have different effects on the channel: Denial of Information, Deception and Mimicry, and Disruption and Destruction. To these he added a fourth strategy, Subversion, which uses the channel to communicate with the victim. Kopp’s work explicitly considers Information Warfare in both social and biological systems, whereas most previous analyses described Information Warfare only in a human context. Kopp argues that Information Warfare is a basic evolutionary adaptation resulting from competition for survival, which manifests itself in a variety of areas.
Kopp draws examples of the proposed attack strategies from three different domains: the insect world, military electronic warfare and cyberwar. A Denial of Information attack conceals or camouflages information from adversaries, preventing its collection and use. Examples include insects that blend into their environments, a stealth fighter which uses its shape and radar-absorbing materials to hide from radar, and the use of encryption to hide information from users of a computer system. Denial of Information attacks may be further categorised into active or passive forms [31]. A passive attack attempts to conceal a signal from the victim’s receiver and is described as covert, since the victim is unlikely to be aware of the attack. In an active attack, the receiver is blanketed by noise so that it cannot discern the signal from the noise. Active attacks are inherently overt, alerting the victim to the fact that it is being attacked. Deception and Mimicry attacks intentionally insert misleading information into a system, which the victim accepts as valid. Examples include harmless insects that mimic the appearance of dangerous predators; defensive jamming equipment on an aircraft that emits false returns to an enemy radar, producing an erroneous position measurement; and techniques used to mask the identity of someone penetrating a network or system. Successful Deception and Mimicry attacks are inherently covert, as they are intended to leave the victim unaware that the information is misleading [31]. Disruption and Destruction describes overt attacks which either disrupt the activities of the victim’s information system or destroy it outright, in order to prevent or delay the collection and processing of information. Examples include beetles that spray noxious fluids onto predators to blind them, and an electromagnetic pulse weapon used to destroy a radar and its supporting communications network.
A denial of service attack is an example of disruption in the cyberwar domain. Disruption and Destruction attacks are overt in nature, as the victim will notice the effects of the attack on its information receiver [31]. Such attacks may also be further classified by their permanence, using existing military terms: “hard-kill” attacks permanently destroy the information sensor, while “soft-kill” attacks temporarily disable the information sensor or system. Subversion attacks trigger self-destructive behaviour in the victim’s system, caused by information inserted by the attacker. An example of this attack among insects is a predatory insect that mimics the appearance of food to lure prey; this deception triggers a self-destructive response from the victim. In aerial warfare, Subversion can be achieved by deceptive signals that trigger the premature detonation of proximity fuses on guided missiles. In cyberwar, logic bombs and viruses are examples of subversive weapons, in which the system uses its own resources to damage itself. Most examples of Subversion combine with a Deception and Mimicry attack, which first inserts the self-destructive signal into the victim [31].
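
The difference between passive and active Denial of Information can be illustrated with the Shannon-Hartley capacity of a channel, C = B log2(1 + S/N). This sketch assumes an additive white Gaussian noise channel, a simplification the text does not make, and all powers and bandwidths are hypothetical. A passive attack lowers the signal power S reaching the victim’s receiver, as a reduced signature does; an active attack raises the noise power N, as a jammer does. In the example both cut capacity by the same amount, but only the active attack changes the noise floor the victim can measure, which is one way to see why it is overt.

```python
import math


def shannon_capacity(bandwidth_hz: float, signal_power: float, noise_power: float) -> float:
    """Shannon-Hartley capacity in bit/s of an additive white Gaussian noise channel."""
    return bandwidth_hz * math.log2(1.0 + signal_power / noise_power)


BANDWIDTH_HZ = 1e6          # hypothetical 1 MHz channel
SIGNAL, NOISE = 100.0, 1.0  # hypothetical baseline powers in the same units (20 dB SNR)

scenarios = {
    # name: (signal power at the victim's receiver, noise power at the receiver)
    "no attack": (SIGNAL, NOISE),
    "passive Denial of Information": (SIGNAL / 100.0, NOISE),  # signal concealed: covert
    "active Denial of Information": (SIGNAL, NOISE * 100.0),   # receiver blanketed: overt
}

if __name__ == "__main__":
    for name, (s, n) in scenarios.items():
        capacity = shannon_capacity(BANDWIDTH_HZ, s, n)
        noise_rise_visible = n > NOISE  # the victim can measure its own noise floor
        print(f"{name}: {capacity / 1e6:.2f} Mbit/s, noise rise visible: {noise_rise_visible}")
```

Running it prints about 6.66 Mbit/s for the undisturbed channel and 1.00 Mbit/s for both attacks, with only the active case flagged as visible.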

Table 1: A comparison of Borden’s and Kopp’s taxonomies of the canonical Information Warfare strategies

Kopp’s categorisation of offensive Information Warfare strategies largely overlaps with Borden’s. Both describe four canonical offensive Information Warfare strategies, three of which are, for all intents and purposes, identical. However, the two models converged from very different starting points: Borden’s from Widnall and Fogelman, and Kopp’s from Shannon’s information theory. Kopp’s “Denial of Information” attack is the same as Borden’s “Degradation” attack, both describing an attack that partially or completely conceals information from the victim. Kopp further categorises these attacks into passive attacks, which are covert, and active attacks, which are overt. Borden’s analysis also covers the temporary concealment of information, delaying the victim’s reception of information, as a method of Degradation. Borden’s “Corruption” strategy and Kopp’s “Deception and Mimicry” strategy describe the same behaviour, in which a corrupted signal mimics a valid signal and the victim is unable to distinguish between the two. Both attacks aim to reduce the integrity of the information targeted. The “Denial” and “Disruption and Destruction” strategies also describe the same act, in which the victim’s information collection and processing apparatus is temporarily or permanently disabled. Such attacks reduce the availability of information and related processing functions to the victim. Kopp’s “Subversion” strategy lacks any equivalent in Borden’s taxonomy. Kopp points out that this is because Borden’s taxonomy follows the United States Air Force’s convention of folding the “Subversion” strategy into the “Denial” strategy [31]. Subversion attacks aim to decrease the integrity of the information on which the victim bases its decisions, causing it to act in a self-destructive manner. Conversely, Borden’s “Exploitation” strategy is not present in Kopp’s taxonomy.
Kopp [27] argues that since Exploitation does not “provide an immediate causal effect in the function of the target”, it cannot be an offensive Information Warfare attack; instead, Exploitation is simply a passive information collection technique. Borden’s and Kopp’s models both describe four canonical offensive strategies of Information Warfare, within which any offensive Information Warfare attack may be categorised (Table 1). Henceforth, when discussing these strategies, the shorter of the two labels will be used to identify each attack. Denning, Borden and Libicki identified three overall aims for Information Warfare operations, and these aims should be achievable through the four canonical strategies described by Borden and Kopp. These aims are to increase the availability of the information resource to the attacker, to decrease the availability of the resource to the defender and to decrease the integrity of the information resource. Table 2 shows how the four canonical strategies are capable of achieving these three aims. It should be noted that Subversion attacks achieve an aim only when the unintentional behaviour they induce in the defender happens to achieve that aim.
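
The correspondence between the two taxonomies, and the links between strategies and Denning’s aims that the prose states, can be written down as a small lookup structure. This is a sketch using plain Python dictionaries; it encodes only the relationships stated in the text, not the full contents of Tables 1 and 2, and the helper names are hypothetical. Taking the shorter label of each Borden/Kopp pair reproduces the four canonical labels, and Subversion’s aims are resolved through the behaviour it induces, following the caveat on Subversion in the text.

```python
from typing import List, Optional, Set

# Borden's label -> Kopp's label for the same strategy (None: no counterpart).
BORDEN_TO_KOPP = {
    "Degradation": "Denial of Information",
    "Corruption": "Deception and Mimicry",
    "Denial": "Disruption and Destruction",
    "Exploitation": None,  # passive collection; Kopp does not treat it as an attack
}
KOPP_ONLY = ["Subversion"]  # folded into Denial under the USAF convention Borden follows

# Denning's aims that each canonical strategy serves directly, as stated in the prose.
DIRECT_AIMS = {
    "Degradation": {"decrease availability to defender"},
    "Denial": {"decrease availability to defender"},
    "Corruption": {"decrease integrity"},
    "Subversion": set(),  # no direct aim: depends on the behaviour it induces
}


def canonical_labels() -> List[str]:
    """Use the shorter label of each Borden/Kopp pair, plus the Kopp-only strategy."""
    paired = [min((b, k), key=len) for b, k in BORDEN_TO_KOPP.items() if k is not None]
    return paired + KOPP_ONLY


def aims_achieved(strategy: str, induced_strategy: Optional[str] = None) -> Set[str]:
    """Aims met by a strategy; Subversion meets an aim only via the effect it induces."""
    if strategy == "Subversion":
        return set() if induced_strategy is None else aims_achieved(induced_strategy)
    return set(DIRECT_AIMS[strategy])


if __name__ == "__main__":
    print(canonical_labels())  # ['Degradation', 'Corruption', 'Denial', 'Subversion']
    # A victim incited to damage its own communications behaves as if under Denial:
    print(aims_achieved("Subversion", induced_strategy="Denial"))
```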

Table 2: Canonical Information Warfare strategies (Borden, Kopp) and Aims of Information Warfare (Denning)

Table 3: Libicki’s categories of Information Warfare and the Canonical Information Warfare strategies which can implement them

Libicki’s categories of possible types of Information Warfare can also be compared against the four canonical strategies to identify which attacks are used in each of these types of Information Warfare (Table 3). It is worth noting that Information Based Warfare uses none of the four canonical strategies. This is because Information Based Warfare is an action similar to Borden’s Exploitation strategy and is therefore not an offensive act of Information Warfare. Subversion attacks implement one of the types of Information Warfare only when they cause an unintended action in the victim that produces whatever effect that type of Information Warfare requires. For example, Subversion will implement Command and Control Warfare if it incites the victim to damage its own communication systems, as in a Denial attack. A difficulty in using this taxonomy to categorise attacks was first identified when attempting to differentiate between Corruption and Subversion attacks. A similar differentiation problem was later observed between other pairs of strategies: Subversion and Denial, Degradation and Corruption, and Degradation and Denial. This problem has been called “Mills’ Paradox” [32]. While labelled as a paradox, the problem is actually one of classification, in which the boundary conditions need to be precisely defined and rigorously applied. Figure 4 shows these classification problems between adjacent strategies, along with the boundary conditions for differentiating between them.

Figure 4: Mills’ Paradox, showing the links between the boundary conditions for classifying Information Warfare attacks (Kopp 2006)

Mills’ Paradox poses the following four questions:

The opposite corners of Figure 4 can always be distinguished easily from each other: Degradation attacks from Subversion attacks, and Corruption attacks from Denial attacks. The same is not true for the remaining four boundary conditions. Herein lies the “paradox”: of the six boundaries, two are so distinct that no ambiguity exists, whereas the remaining four require very careful analysis to establish exactly where one strategy begins and the other ends. A useful observation in this context is that Subversion and Corruption attacks are focussed on information processing, whereas Degradation and Denial attacks are focussed on the channel.

Distinguishing a Subversion attack from a mimicking Corruption attack requires an understanding of how the victim processes the deceptive input message. In both, a message is used to deceive the target system to the advantage of the attacker. The distinction lies in whether the victim responds to the attack voluntarily or involuntarily. A Corruption attack alters the victim’s perception of external reality, and the victim then responds to the apparent environmental change with a voluntary action of some kind. In a Subversion attack, some involuntary internal mechanism is triggered to cause harm to the victim. Biological cases often prove difficult to separate.

Distinguishing a destructive Subversion attack from a Denial attack can also be problematic where the end state is a destructive hard kill of the victim. Superficially the effect is the same: the victim receiver or system is no longer operational. The distinction lies in whether the destruction of the victim resulted from the expenditure of energy by the attacker or by the victim itself. A destructive Denial attack invariably sees the attacker deliver the destructive effect by external means, whereas Subversion sees the victim, once triggered, deliver the destructive effect against itself. In both attacks the victim’s role is involuntary.

Distinguishing a passive Degradation attack from a mimicking Corruption attack can frequently present difficulties, especially in biological systems. The boundary condition is whether the victim misidentifies the attacker or fails to perceive it at all. Mimicry designed to camouflage an attacker against the background noise is a passive Degradation attack, since the victim cannot perceive the attacker.

Distinguishing an active Degradation attack from a soft kill Denial attack may also be superficially difficult. In both instances the channel has been rendered unusable by an observable attack on the receiver. The boundary condition is whether the receiver remains functional. Overloading the receiver to deny its use is quite distinct from leaving the channel unusable by saturating it with a jamming signal. Criteria for the boundary conditions are presented in Table 4.

By contrast, Degradation and Subversion attacks, and likewise Corruption and Denial attacks, are clearly distinguishable, because the effects of each pair are mutually exclusive. Degradation requires a functional victim system to achieve its effect, but Subversion results in the destruction or serious functional impairment of the victim. The same dichotomy exists between Corruption and Denial, as a deception cannot be effected if the victim system loses its channel or receiver.
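The boundary conditions described above can be read as a short decision procedure. The Python sketch below is a hypothetical encoding of the text's criteria, not a reproduction of Table 4: the `AttackObservation` fields and the order of the tests are illustrative assumptions, and real cases (especially biological ones) may not reduce cleanly to three yes/no answers.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AttackObservation:
    """Hypothetical yes/no answers to the boundary-condition questions."""
    involuntary_self_harm: bool   # victim triggered to expend its own energy against itself
    receiver_functional: bool     # does the victim's receiver still work?
    attacker_misidentified: bool  # attacker perceived but taken for something else


def classify(obs: AttackObservation) -> str:
    """Map boundary-condition answers onto a canonical strategy (illustrative only)."""
    # Subversion vs Corruption / Denial: an involuntary internal mechanism
    # is triggered and the victim delivers the harmful effect itself.
    if obs.involuntary_self_harm:
        return "Subversion"
    # Denial vs active Degradation: the receiver no longer functions,
    # and the attacker supplied the energy to achieve that.
    if not obs.receiver_functional:
        return "Denial"
    # Corruption vs passive Degradation: misidentified rather than unperceived.
    if obs.attacker_misidentified:
        return "Corruption"
    # Receiver still works; attacker hidden in noise or channel jammed.
    return "Degradation"


camouflage = AttackObservation(involuntary_self_harm=False,
                               receiver_functional=True,
                               attacker_misidentified=False)
print(classify(camouflage))  # prints Degradation
```

The Subversion test comes first because, as noted above, a destructive Subversion attack and a Denial attack can leave the same end state; only the question of who expended the energy separates them.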

Table 4: The boundary conditions for differentiating between the canonical Information Warfare strategies It is also interesting to sort the strategies according to how covert they are. Passive forms of Degradation and all forms of Corruption are inherently covert. Conversely, both Denial and Subversion are ultimately overt, as the victim system is damaged or impaired in function. In a sense, the Denial and Subversion strategies are centred on damaging or impairing the victim’s apparatus for information gathering and processing, whereas the Degradation and Corruption strategies are centred on compromising the information itself. Information Warfare attacks

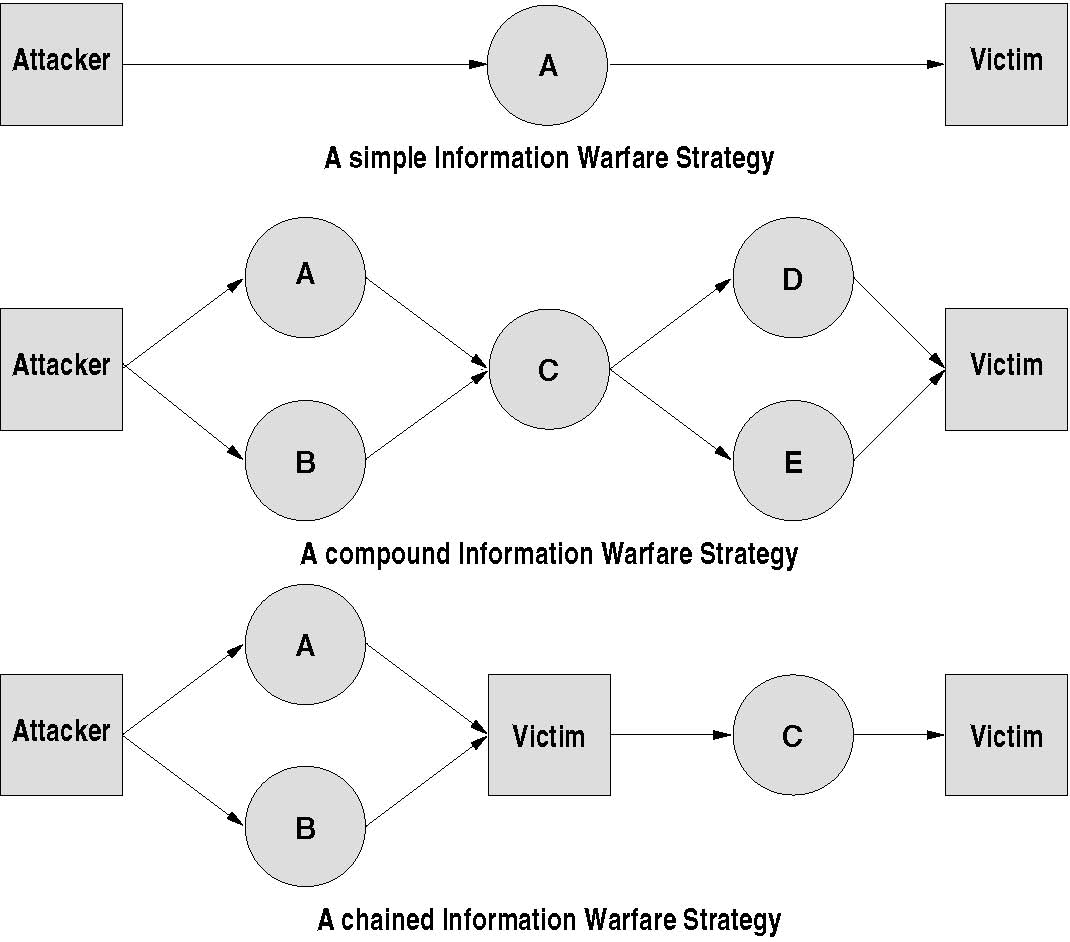

may be combined, forming a compound Information Warfare strategy [29].

A compound Information Warfare strategy is a partially-ordered directed

graph (network) of multiple attacks, which has a precedence

relationship

described by the graph's (network’s) structure. Each individual attack

is

designed to drive the victim towards an intended final state and may

have multiple predecessor and successor strategies. Overall success of

a compound strategy depends upon whether the victim’s end state matches

that intended by the attacker. As an example of a compound attack consider

a communication displaying “an indifference to what is real” as defined

by Frankfurt [0], intended not to inform but to distract, misdirect or

beguile. A specific instantiation of a communication

characterised by “an indifference to what is real” may implement an

adaptive compound strategy, which utilises typically some combination

of Degradation, Corruption and Subversion as a substitute for the

actual informative content of a message. Several examples are detailed

by Kopp in [28, 29, 30].
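Because a compound strategy is a partially ordered directed graph, a topological sort recovers an order in which its attacks can be executed. The Python sketch below uses a hypothetical helper, `parallel_stages`, built on Kahn's algorithm. It groups attacks into stages in which every predecessor has already completed; attacks in the same stage have no precedence relation between them. The attack names are placeholders, not taken from any figure.

```python
from collections import defaultdict


def parallel_stages(precedence):
    """Group attacks into stages; each stage's predecessors are all complete.

    precedence maps each attack to the list of attacks that must precede it.
    Raises ValueError if the relation has a cycle (i.e. is not a partial order).
    """
    nodes = set(precedence)
    for preds in precedence.values():
        nodes.update(preds)

    indegree = {n: 0 for n in nodes}   # number of unfinished predecessors
    successors = defaultdict(list)
    for node, preds in precedence.items():
        for p in preds:
            successors[p].append(node)
            indegree[node] += 1

    stage = sorted(n for n in nodes if indegree[n] == 0)
    stages, done = [], 0
    while stage:
        stages.append(stage)
        done += len(stage)
        ready = []
        for n in stage:
            for s in successors[n]:
                indegree[s] -= 1
                if indegree[s] == 0:   # last predecessor just finished
                    ready.append(s)
        stage = sorted(ready)

    if done != len(nodes):
        raise ValueError("precedence relation has a cycle")
    return stages


# Hypothetical compound strategy: A and B precede C, which precedes D.
strategy = {"A": [], "B": [], "C": ["A", "B"], "D": ["C"]}
print(parallel_stages(strategy))  # prints [['A', 'B'], ['C'], ['D']]
```

A cycle would mean that some attack is its own precedent, so the sketch rejects it rather than guessing an order.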

Kopp also defines the concept of a chained compound strategy, in which an intermediate victim is used to propagate an attack against the final victim. An example is the exploitation of media organisations by terrorist movements, which use them to spread news of successful terrorist attacks. Figure 5 shows state transition diagrams for a canonical Information Warfare strategy, a compound Information Warfare strategy and a chained Information Warfare strategy.

Since compound Information Warfare strategies form directed graphs with individual strategies and victims as nodes and dependencies as arcs, they can be analysed using graph theory. One property of interest is the cut vertex, whose removal partitions the graph into two or more disconnected graphs [15]. For a compound strategy, removing a cut vertex is akin to the failure of an Information Warfare attack, so such attacks represent a single point of failure for the entire compound strategy. Attackers may remove cut vertices by adding redundant, functionally identical attacks, analogous to fault-tolerant computer system design. Defenders who are aware of a compound strategy’s structure may attempt to identify cut vertices and focus their defence against those Information Warfare attacks. Another property of interest is parallelisation: as in parallel software execution, parallel attacks (such as A and B in the compound strategies of Figure 5) can be undertaken simultaneously.

Figure 5: Compound and Chained Information Warfare strategies (Kopp 2005)

In the pursuit of a compound attack, the state of the victim is often crucial. If a predecessor strategy has failed to produce its intended effect, successor attacks in the compound strategy may be ineffective, or even counter-productive by betraying the predecessor strategy.
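The cut-vertex test can be sketched in a few lines of Python. This hypothetical example treats the compound strategy as undirected for connectivity purposes and uses a brute-force check (remove each node, then count connected components) rather than Tarjan's linear-time algorithm; that is adequate for small graphs. Node names are illustrative assumptions: `S` is a start state, `A` and `B` are parallel attacks, and `D` is the intended end state.

```python
from collections import defaultdict


def count_components(adj, removed=None):
    """Count connected components of an undirected graph, ignoring `removed`."""
    seen, count = set(), 0
    for start in adj:
        if start == removed or start in seen:
            continue
        count += 1
        stack = [start]
        seen.add(start)
        while stack:                      # iterative depth-first search
            node = stack.pop()
            for nb in adj[node]:
                if nb != removed and nb not in seen:
                    seen.add(nb)
                    stack.append(nb)
    return count


def cut_vertices(edges):
    """Return nodes whose removal disconnects the compound strategy graph."""
    adj = defaultdict(set)
    for u, v in edges:
        adj[u].add(v)
        adj[v].add(u)                     # connectivity ignores arc direction
    base = count_components(adj)
    return sorted(n for n in list(adj) if count_components(adj, removed=n) > base)


strategy = [("S", "A"), ("S", "B"), ("A", "C"), ("B", "C"), ("C", "D")]
print(cut_vertices(strategy))   # prints ['C']

# Adding a hypothetical attack E that bypasses C removes the single point of failure.
redundant = strategy + [("S", "E"), ("E", "D")]
print(cut_vertices(redundant))  # prints []
```

Here `A` and `B` back each other up, so neither is a cut vertex, whereas every path from `S` to `D` passes through `C` until the redundant attack is added.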
In a successful compound attack, the victim’s internal state steps through a series of discrete states reflecting the successful effect of each node (attack) in the compound strategy. This exposes a problem well documented historically in the execution of complex deceptions: determining the effect of a previously executed strategy may be difficult or impossible.

There are many common elements among the various definitions of Information Warfare. Information Warfare consists of offensive and defensive elements, in which one’s own information and information collection and processing functions are protected, while those of competitors are attacked. Information Operations may describe individual acts, while Information Warfare may describe an overall campaign of Information Operations against one or more competitors. Alternatively, each instance of Information Warfare may be called an Information Warfare attack.

Information Warfare attacks may be performed by a single entity or by a group of entities. From the definitions above, the following users of Information Warfare can be identified: citizens, governments, companies, criminals, countries, non-nation states, political groups and business cartels. Any of these may target attacks within their own group or across groups. For example, criminals may perform Information Warfare attacks against companies, while political groups could attack, and defend against attacks from, other political groups.

Information Warfare capabilities have also influenced the design of machines and systems, with many military examples, including stealth aircraft and visual camouflage schemes. Many, if not most, animal and plant species also use Information Warfare strategies to aid their survival and reproduction.

Modern communication networks and computer systems have created a new environment for Information Warfare attacks. In consequence, Information Warfare has often been incorrectly described as a modern development.
However, elements of Information Warfare are typically coextensive with human military conflict, including chronicled instances of strategic military deception and psychological warfare. This identifies a classical use of Information Warfare, recently extended to exploit new technology, and we can expect this pattern to continue for as long as technology advances. In biology, there is a clear parallel in the co-adaptive arms races between predator and prey species. Narrow definitions of Information Warfare, which restrict it to attacks involving computer systems or telecommunications networks, or even simply to human activity, miss this broader significance. A more appropriate concept allows for Information Warfare in any competitive environment where information processing takes place. Identifiable instances of Information Warfare, both in human history and in nature, support the broad definition [28].

The canonical Information Warfare strategies provide a framework for categorising Information Warfare attacks and for identifying the functional similarities between what may appear to be quite different attacks. For example, a camouflaged insect and a stealth aircraft both use the same canonical strategy, Degradation, against potential observers to achieve the same goal: concealment.


Shannon's Communication Theory and Information Warfare


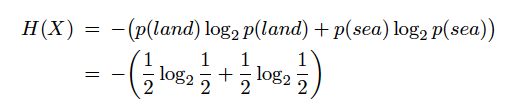

Borden [7] and Kopp [25] both assert that Information Warfare, being based upon the concept of information, should be analysed in terms of Shannon’s information theory. Borden argues that an action a decision-maker performs on data will reduce its uncertainty. Measuring the decision-maker’s uncertainty before and after this action will reveal the change in uncertainty, which may be measured in bits. An example is the case of

Paul Revere [7]. Revere was awaiting information regarding the movement

of British troops, who would move either by land or by sea. This

information was to be forwarded by Revere to the American

Revolutionaries. A lookout in a nearby church tower would observe the

British and report the method of their approach by showing “one lantern

if by land, two if by sea”. For Revere both approaches were equally

probable (p(land) = p(sea) = 1/2), so he had one bit of

uncertainty (Equation 3). Revere observed that two lanterns had been

lit, which informed him that the British were coming by sea, thereby

reducing his uncertainty. Revere’s uncertainty after receiving the

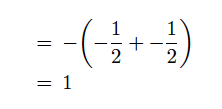

message is recalculated with p(land)=0, p(sea)=1 (with the

usual

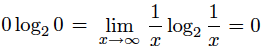

assumption that  As Equation 4 shows, Revere’s uncertainty was reduced to 0, and so the message reduced 1 bit of uncertainty.
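The Revere calculation can be checked numerically. The following is a minimal Python sketch, assuming base-2 logarithms and the 0 · log2 0 = 0 convention used above; the `entropy` helper is illustrative, not taken from the source. It also compares the equiprobable case with a hypothetical skewed one, where the uncertainty is lower.

```python
from math import log2


def entropy(probs):
    """Shannon entropy in bits; terms with p == 0 are skipped (0 * log2 0 = 0)."""
    return sum(-p * log2(p) for p in probs if p > 0)


before = entropy([0.5, 0.5])   # p(land) = p(sea) = 1/2
after = entropy([0.0, 1.0])    # two lanterns seen: p(land) = 0, p(sea) = 1
print(before, after, before - after)  # prints 1.0 0.0 1.0

# Hypothetical lopsided prior: less uncertainty than the equiprobable case.
skewed = entropy([0.9, 0.1])   # about 0.47 bits
```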

Equation 3 also demonstrates that when one is equally free to choose between different messages, the amount of information in the transmitted message is maximised. Conversely, Equation 4 shows that when a message is certain, it contains no information. As the probability of a message being selected increases, the amount of information it contains decreases. In Shannon’s definition of information, the key property of a message is therefore its improbability. So one feature of Information Warfare is the attacker’s attempt to reduce its own uncertainty or to increase the uncertainty of its target.

Information Warfare may also be considered in terms of its effects on the capacity of an information channel. Kopp states that “Information Warfare in the most fundamental sense amounts to manipulation of a channel carrying information in order to achieve a specific aim in relation to an opponent engaged in a survival conflict” [27]. Borden [8] has described Information Warfare as a “battle for bandwidth”, a competition over the available capacity of an information channel. The canonical Information Warfare strategies can be explained in terms of how each affects an information channel [25], by examining their effects on the terms of Shannon’s channel capacity formula (Equation 2). An information channel’s capacity (C) can be reduced by decreasing its bandwidth (W), decreasing the signal power (P) or increasing the noise in the channel (N). The four canonical strategies may all be understood as fundamental modes of attack against a victim’s communications channel. Again, all forms of attack on a communications channel can be mapped onto the four canonical strategies, or onto some compound strategy arising from two or more canonical strategies. Numerous specialised forms of attack exist, and many have to date been mapped into compound or canonical strategies.
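To see how each term moves the capacity, the sketch below assumes that Equation 2 takes the standard Shannon-Hartley form C = W log2(1 + P/N), with W in hertz and P and N in the same power units. The `capacity` helper and the figures are arbitrary illustrative values, chosen so that the results are exact.

```python
from math import log2


def capacity(W, P, N):
    """Shannon-Hartley channel capacity in bit/s (assumed form of Equation 2)."""
    return W * log2(1 + P / N)


baseline = capacity(W=1000.0, P=3.0, N=1.0)   # 1000 * log2(4)
narrower = capacity(W=500.0, P=3.0, N=1.0)    # bandwidth W halved
weaker = capacity(W=1000.0, P=1.0, N=1.0)     # signal power P reduced
noisier = capacity(W=1000.0, P=3.0, N=3.0)    # noise N raised, as a jamming signal would
print(baseline, narrower, weaker, noisier)    # prints 2000.0 1000.0 1000.0 1000.0
```

Each of the three changes halves the capacity here. That is a coincidence of the chosen numbers: W enters linearly, whereas P and N act only through the logarithm of the signal-to-noise ratio.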
Two assumptions are made in this model. The first is that the victim receiver can wholly understand, and thus decode, the messages it receives, which is not necessarily true in general. The second is that some repeatable mapping exists between a message, background noise and the quantitative measures of P and N. A basis for establishing such a mapping lies in Shannon entropy, which shows that a message whose content is entirely predictable carries no information [52]:

$$I(m) = -\log_2 p(m)$$

where I(m) is the information content of the message and